Audio sampling in music production involves taking a small portion of a sound recording and using it in a new composition. This process allows producers to incorporate existing sounds into their music, adding depth and texture to their tracks. Samples can be manipulated in various ways to create unique sounds and melodies, making them a valuable tool for producers looking to experiment with different styles and genres.

Common techniques used to manipulate audio samples include time stretching, pitch shifting, and adding effects such as reverb or distortion. Time stretching allows producers to change the tempo of a sample without affecting its pitch, while pitch shifting alters the pitch of the sample without changing its tempo. These techniques can be used creatively to transform a simple sample into a complex and dynamic element within a track.
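The arithmetic behind these two techniques can be sketched in a few lines. The function names below are illustrative and not taken from any particular DAW or library. The sketch assumes equal temperament for the pitch ratio, and the `naive_resample` helper deliberately shows what happens without a proper time-stretch algorithm: simple resampling changes pitch and duration together.

```python
def semitones_to_ratio(semitones: float) -> float:
    """Frequency ratio for a pitch shift, assuming 12-tone equal temperament."""
    return 2.0 ** (semitones / 12.0)


def stretch_factor(source_bpm: float, target_bpm: float) -> float:
    """Length multiplier that makes a loop recorded at source_bpm fit target_bpm."""
    return source_bpm / target_bpm


def naive_resample(samples: list, ratio: float) -> list:
    """Read through `samples` `ratio` times faster, using linear interpolation.

    This is NOT time stretching or pitch shifting on its own: it raises the
    pitch by `ratio` and shortens the sample by the same factor. Real tools
    use additional processing to separate the two.
    """
    out_len = int(len(samples) / ratio)
    out = []
    for i in range(out_len):
        pos = i * ratio                      # fractional read position
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)   # clamp at the last sample
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


if __name__ == "__main__":
    print(semitones_to_ratio(12))            # one octave up doubles the frequency
    print(stretch_factor(90, 120))           # a 90 BPM loop must shrink to fit 120 BPM
    print(len(naive_resample([0.0] * 1000, 2.0)))
```

Shifting up an octave by plain resampling halves the length of the sample, which is why dedicated time-stretching and pitch-shifting tools are needed to change one property while holding the other fixed.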

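The use of audio samples in commercial music production is subject to copyright law, and producers must obtain the necessary licenses, a process often called clearing the sample, to use samples legally. A sample taken from a commercial recording usually involves two separate rights, the sound recording itself and the underlying composition, and these may have different owners. Clearance typically involves paying a fee or agreeing a royalty share with the copyright holders in exchange for permission to use the sample in a commercial release. Failure to obtain the proper licenses can result in legal action and financial penalties, so producers should confirm that they hold the rights to every sample in their music.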

Producers can ensure that their sampled audio is high quality and clear by using professional recording equipment and software. Recording samples in a controlled environment with high-quality microphones and soundproofing can help capture clean and crisp audio. Additionally, using editing tools to remove background noise and enhance the clarity of the sample can further improve its quality.

Popular software programs used for audio sampling include Ableton Live, FL Studio, and Logic Pro. These programs offer a range of tools and features for manipulating samples, such as time stretching, pitch correction, and effects processing. Producers can use these programs to import, edit, and arrange samples within their tracks, allowing for endless creative possibilities in music production.

Artists can find and select audio samples to use in their music from a variety of sources, including sample libraries, online databases, and vinyl records. Sample libraries offer a wide selection of pre-recorded sounds and loops that producers can use in their tracks, while online databases provide access to a vast collection of samples from different genres and styles. Vinyl records are also a popular source of samples, as they often contain unique and rare sounds that can add a vintage or nostalgic feel to a track.

Because sampling copyrighted material without permission can lead to infringement claims and lawsuits, licenses should be in place before a commercial release, not after it. Producers who want to avoid clearance altogether can use royalty-free samples, after checking the license terms that come with them, or create their own original sounds.

Room acoustics play a crucial role in determining the sound quality in a recording studio. The size, shape, materials, and layout of the room all affect how sound waves travel and interact within the space, shaping reverberation, reflections, standing waves, and frequency response. Acoustic treatment, such as absorbers, diffusers, and bass traps, helps minimize unwanted reflections and reverberation, resulting in a more accurate and balanced sound in recordings. Soundproofing is a separate concern: it keeps outside noise from entering the room and studio sound from leaking out, but it does not change how the room sounds inside. Without adequate acoustic treatment, the room may introduce coloration and an uneven frequency response that degrade the recorded audio. Recording studios should therefore assess and optimize their room acoustics to achieve the best possible sound quality.
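Standing waves are the most predictable of these effects, because the lowest ones depend only on the room's dimensions. As a rough sketch, the axial modes between two parallel walls fall at multiples of c / (2L), where c is the speed of sound and L is the distance between the walls. The speed of sound value and the example room size below are assumptions chosen for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 °C


def axial_modes(dimension_m: float, count: int = 3) -> list:
    """First `count` axial standing-wave frequencies (Hz) for one room dimension."""
    return [n * SPEED_OF_SOUND_M_S / (2.0 * dimension_m) for n in range(1, count + 1)]


if __name__ == "__main__":
    # Hypothetical room: 5.0 m long, 4.0 m wide, 2.5 m high.
    for name, size in [("length", 5.0), ("width", 4.0), ("height", 2.5)]:
        modes = ", ".join(f"{f:.1f} Hz" for f in axial_modes(size))
        print(f"{name} ({size} m): {modes}")
```

Modes that cluster at the same frequency across several dimensions tend to be the most audible, which is one reason bass traps are usually aimed at the low end of the spectrum.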

Latency in audio production refers to the delay between the input of a sound signal and its output. This delay can disrupt recording and mixing by causing synchronization issues and making it difficult to monitor a performance and adjust levels in real time. To minimize latency, producers can use low-latency audio interfaces and drivers, smaller audio buffer sizes, faster processors, and optimized software settings. Direct monitoring, also called hardware or zero-latency monitoring, routes the input signal straight to the headphone output inside the interface, so the performer hears it without the round trip through the computer. Addressing latency with these methods makes for a smoother and more efficient production workflow.
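A large part of this delay comes from the audio buffer, and that part is easy to estimate: one buffer of N samples at a given sample rate takes N / rate seconds to fill. The sketch below computes only that per-buffer figure. Converter and driver overhead vary by device and are not modelled here.

```python
def buffer_latency_ms(buffer_size_samples: int, sample_rate_hz: int) -> float:
    """Time in milliseconds needed to fill one audio buffer."""
    return buffer_size_samples / sample_rate_hz * 1000.0


if __name__ == "__main__":
    for size in (64, 128, 256, 512, 1024):
        print(f"{size:>5} samples @ 48 kHz: {buffer_latency_ms(size, 48000):.2f} ms")
```

At 48 kHz, a 256-sample buffer adds about 5.3 ms in each direction, while 1024 samples adds about 21.3 ms. Smaller buffers lower the delay but give the processor less time per block, so very small settings can cause dropouts on a heavily loaded session.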

Phantom power is a method of delivering electrical power to microphones, typically condenser microphones, through the microphone cable. Condenser microphones need it because they require power to operate their internal circuitry and produce a signal. Phantom power is typically supplied at 48 volts and travels over the same balanced cable that carries the audio signal from the microphone to the preamp or mixer. The voltage is applied equally to both signal conductors relative to the cable shield, so a balanced input does not see it as audio. This eliminates the need for a separate power supply or batteries for the microphone, making it more convenient and reliable for recording. Long cable runs remain possible because the connection is balanced, not because of phantom power itself.
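The 48 volts is the supply voltage, not what the microphone actually receives. The supply feeds each signal conductor through a resistor, so the voltage at the microphone drops as it draws more current. The sketch below assumes the commonly quoted feed resistor value of about 6.8 kΩ per conductor. Treat that figure as an assumption and check the specification of the actual preamp.

```python
SUPPLY_VOLTS = 48.0
FEED_RESISTOR_OHMS = 6800.0  # assumed nominal value per signal conductor


def voltage_at_mic(current_ma: float) -> float:
    """Approximate voltage available at the microphone for a given current draw.

    The two feed resistors carry the current in parallel, so the effective
    source resistance is half of one resistor.
    """
    effective_ohms = FEED_RESISTOR_OHMS / 2.0
    return SUPPLY_VOLTS - (current_ma / 1000.0) * effective_ohms


if __name__ == "__main__":
    for ma in (2.0, 4.0, 10.0):
        print(f"{ma:>4.1f} mA draw -> {voltage_at_mic(ma):.1f} V at the microphone")
```

Under these assumptions, a microphone drawing 4 mA sees about 34.4 V and one drawing 10 mA sees about 14 V, which is why microphones are designed to run on far less than the full 48 volts.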

Wireless microphones are typically configured and synchronized with receivers through a process known as frequency coordination. This involves selecting appropriate frequencies for the microphones to operate on, taking into account factors such as interference from other wireless devices and the availability of clear channels. Once the frequencies are chosen, the microphones are paired with their corresponding receivers using infrared synchronization or manual input of frequency settings. This ensures that the microphones and receivers are communicating on the same frequency, allowing for seamless audio transmission. Additionally, some wireless microphone systems may utilize automatic frequency scanning and synchronization features to simplify the setup process for users. Overall, proper configuration and synchronization of wireless microphones with receivers is essential for achieving reliable and high-quality audio performance in various applications such as live performances, presentations, and recording sessions.
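The frequency selection step can be sketched as a simple search. The function below is a hypothetical helper, not part of any manufacturer's software. It picks frequencies from a candidate list while keeping a minimum spacing from frequencies already in use and from each other. The 400 kHz spacing and the example frequencies are assumptions for illustration, and the sketch ignores intermodulation products, which real coordination tools also account for.

```python
def pick_clear_frequencies(candidates_khz, occupied_khz, needed, min_spacing_khz=400):
    """Choose `needed` frequencies that keep `min_spacing_khz` from every other carrier.

    Frequencies are integers in kHz to avoid floating-point comparison issues.
    Raises ValueError if not enough clear frequencies exist.
    """
    chosen = []
    for freq in sorted(candidates_khz):
        if len(chosen) == needed:
            break
        in_use = list(occupied_khz) + chosen
        if all(abs(freq - other) >= min_spacing_khz for other in in_use):
            chosen.append(freq)
    if len(chosen) < needed:
        raise ValueError("not enough clear frequencies for the requested channel count")
    return chosen


if __name__ == "__main__":
    candidates = [470000, 470200, 470400, 470800, 471200]  # hypothetical tunable channels
    occupied = [470300]                                    # hypothetical interferer found by a scan
    print(pick_clear_frequencies(candidates, occupied, needed=2))
```

Once a set like this is chosen, each frequency is assigned to one receiver and transferred to its transmitter, by infrared sync or manual entry as described above.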

A compressor in audio processing is a dynamic range processor that reduces the level of sounds exceeding a set threshold, producing a more consistent output level. The threshold sets the level at which compression begins, the ratio sets how strongly the signal above the threshold is reduced, attack and release set how quickly the compressor reacts and recovers, and makeup gain raises the overall level afterwards, which brings quiet passages up relative to the peaks. Together these controls make a signal more balanced and easier to mix in a recording or live sound setting. Compressors are commonly used in music production, broadcasting, and live sound reinforcement to improve the clarity and impact of audio signals. They can also be used creatively to add punch, sustain, or character to a sound source.
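The static part of a compressor, meaning its behaviour once attack and release have settled, comes down to a short calculation in decibels. The sketch below assumes a hard knee and ignores attack and release entirely. The default threshold and ratio are arbitrary example settings.

```python
def compress_db(level_db: float, threshold_db: float = -18.0,
                ratio: float = 4.0, makeup_db: float = 0.0) -> float:
    """Output level in dB for a steady input level (hard knee, no attack/release)."""
    if level_db > threshold_db:
        # Above the threshold, every `ratio` dB of input yields 1 dB of output.
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db


if __name__ == "__main__":
    for level in (-30.0, -18.0, -6.0, 0.0):
        print(f"in {level:>6.1f} dB -> out {compress_db(level, makeup_db=3.0):>6.1f} dB")
```

With a threshold of -18 dB and a 4:1 ratio, an input at -6 dB sits 12 dB over the threshold and comes out 3 dB over it, at -15 dB. Inputs below the threshold pass unchanged, and makeup gain then shifts everything up by the same amount.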

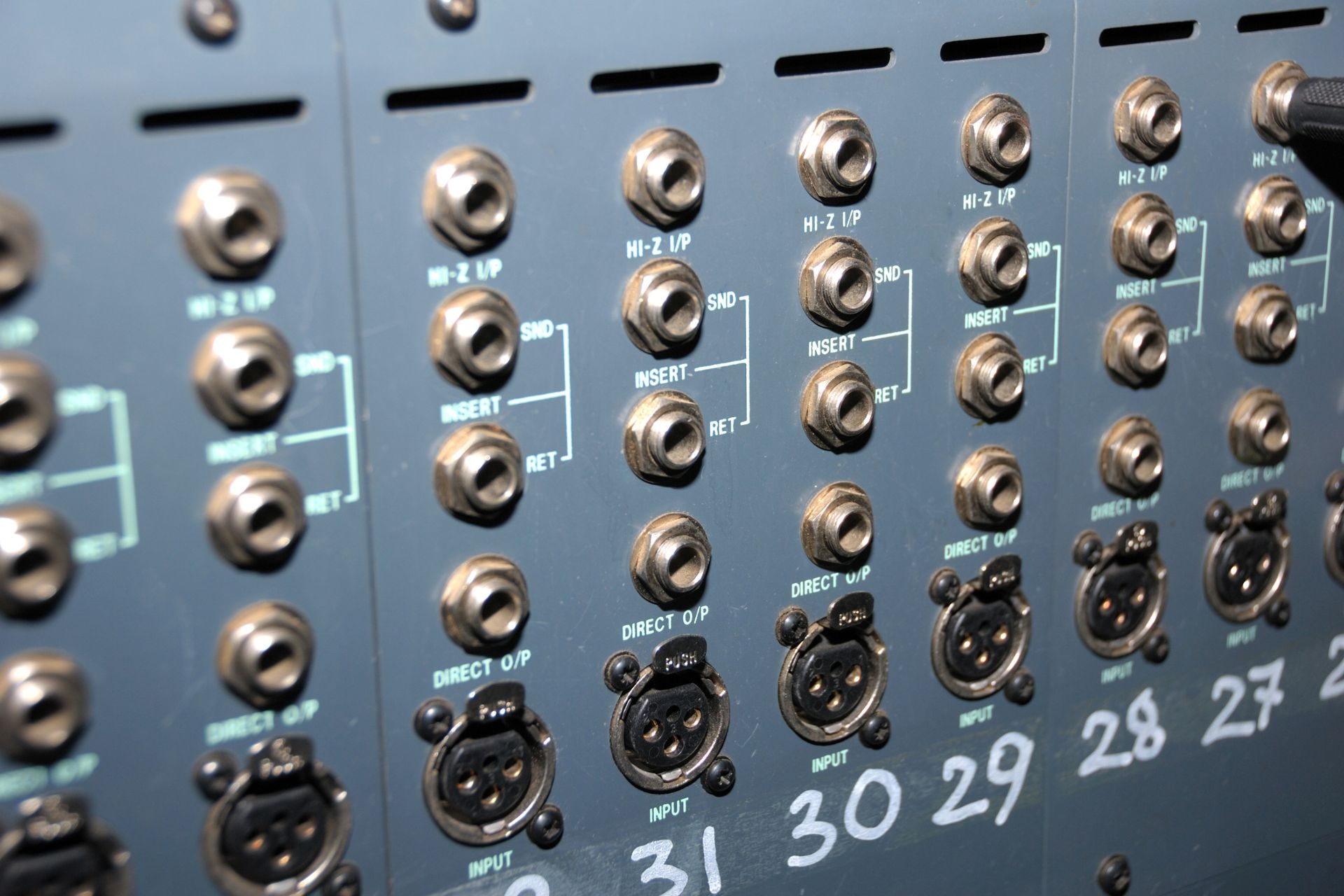

Mixing consoles utilize a combination of channels, buses, faders, and routing options to manage multiple audio signals simultaneously. Each channel on the console is dedicated to a specific audio input, such as a microphone or instrument, allowing the user to adjust the volume, tone, and effects for each individual signal. Buses on the console enable the user to group together multiple channels and process them as a single unit, making it easier to control and manipulate multiple signals at once. Faders on the console allow the user to adjust the volume levels of each channel and bus, while routing options determine how the audio signals are sent to various outputs such as speakers or recording devices. By utilizing these features, mixing consoles can effectively manage and mix multiple audio signals in real-time.
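The relationship between channels, faders, and buses can be sketched as a small summing function. This is a simplified model, not how any specific console is implemented: each channel is a list of samples with a fader setting in decibels, and the bus sums the scaled channels before applying its own fader. All names are illustrative.

```python
def db_to_gain(db: float) -> float:
    """Convert a fader setting in dB to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def mix_bus(channels, bus_fader_db: float = 0.0) -> list:
    """Sum channels into one bus.

    `channels` is a list of (samples, fader_db) pairs whose sample lists
    are assumed to have equal length.
    """
    if not channels:
        return []
    bus = [0.0] * len(channels[0][0])
    for samples, fader_db in channels:
        gain = db_to_gain(fader_db)
        for i, sample in enumerate(samples):
            bus[i] += sample * gain       # channel fader applied before summing
    bus_gain = db_to_gain(bus_fader_db)
    return [sample * bus_gain for sample in bus]  # bus fader applied to the group


if __name__ == "__main__":
    kick = ([1.0, 0.5, 0.0], 0.0)     # hypothetical channel at unity gain
    snare = ([0.0, 0.5, 1.0], -6.0)   # hypothetical channel pulled down 6 dB
    print(mix_bus([kick, snare], bus_fader_db=-3.0))
```

Moving the bus fader changes the level of every channel routed to it at once, while the balance between those channels stays fixed by their individual faders.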